A more advanced task for a computer is predicting how likely an offender is to commit another crime. That’s the job of an AI system called COMPAS (Correctional Offender Management Profiling for Alternative Sanctions). But it turns out the tool is no better than an average bloke, and can be racially biased too. That is what a research team found after extensively studying the system, which is widely used by judicial institutions. According to a research paper published in Science Advances, COMPAS was pitted against a group of human participants to see how its predictions fare against those made by ordinary people. Both the machine and the human participants were given 1,000 test descriptions listing the age, gender, previous crimes and other details of offenders, and had to predict whether each one would re-offend.

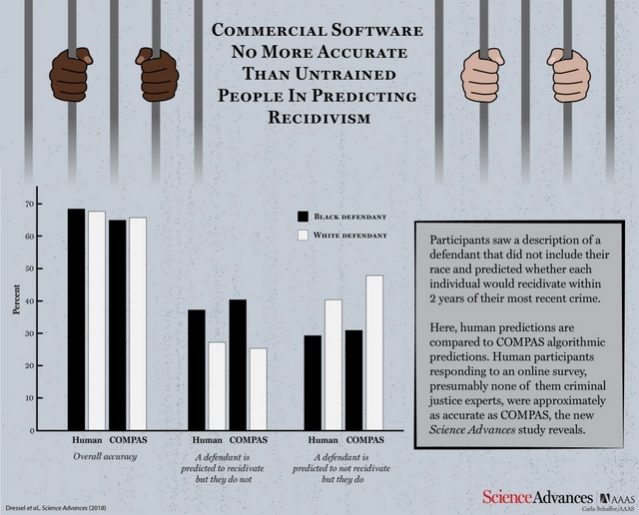

COMPAS clocked an overall accuracy of 65.4% in predicting recidivism (the tendency of a convicted criminal to re-offend), slightly below the 67% collective prediction accuracy of the human participants. Take a moment to let that sink in: an AI system that fares no better than an average person was used by courts to predict recidivism.
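For readers curious how such accuracy figures are worked out, here is a minimal Python sketch. It assumes the humans' "collective prediction" is a simple majority vote per case, which may not match the study's exact procedure. The function names and the tiny data set are made up for illustration and are not taken from the paper.

```python
def accuracy(predictions, outcomes):
    """Fraction of cases where the prediction matches what actually happened."""
    if not outcomes or len(predictions) != len(outcomes):
        raise ValueError("need two non-empty lists of equal length")
    correct = sum(p == o for p, o in zip(predictions, outcomes))
    return correct / len(outcomes)


def majority_vote(votes_per_case):
    """Collapse each case's individual yes/no votes into one collective prediction."""
    return [sum(votes) > len(votes) / 2 for votes in votes_per_case]


# Toy, made-up data: True means "re-offended" / "predicted to re-offend".
outcomes = [True, False, True, True, False]
tool_predictions = [True, True, True, False, False]
human_votes = [
    [True, True, False],
    [False, False, True],
    [True, False, False],
    [True, True, True],
    [False, True, False],
]

tool_accuracy = accuracy(tool_predictions, outcomes)
crowd_accuracy = accuracy(majority_vote(human_votes), outcomes)
print(f"tool: {tool_accuracy:.0%}, crowd: {crowd_accuracy:.0%}")
```

On real data the two lists would hold one entry per offender description, and the resulting percentages are the kind of numbers being compared above.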

What’s even worse, the system was found to be just as susceptible to racial prejudice as its human counterparts were when they predicted recidivism from descriptions that also included the offenders’ race. That shouldn’t come as a big surprise, since AI is known to take on the patterns its human teachers give it to learn from. Although both sides fall well short of an acceptable accuracy score, relying on an AI tool that is no better than an average human being raises many questions.